
Modifying the model code for the ensemble integration


Contents of this page

- Overview

- Calling PDAF_init_forecast

- Inserting assimilate_pdaf into the model code

- Using assimilate_pdaf

- User-supplied routines

  - next_observation_pdaf (next_observation_pdaf.F90)

  - U_distribute_state (distribute_state_pdaf.F90)

  - U_prepoststep (prepoststep_ens_pdaf.F90)

Overview

On the page on the implementation concept of the online mode we explained the modification of the model code for the ensemble integration. Here, we focus on the fully parallel implementation variant, which needs only minor code changes. In this variant, the number of model tasks equals the ensemble size, so that each model task integrates exactly one model state and the model always moves forward in time. As before, we refer to the example code for the online mode in tutorial/online_2D_parallelmodel and tutorial/online_2D_serialmodel.

The more complex flexible parallelization variant is described separately on the page: Modification of the model code for the 'flexible' implementation variant.

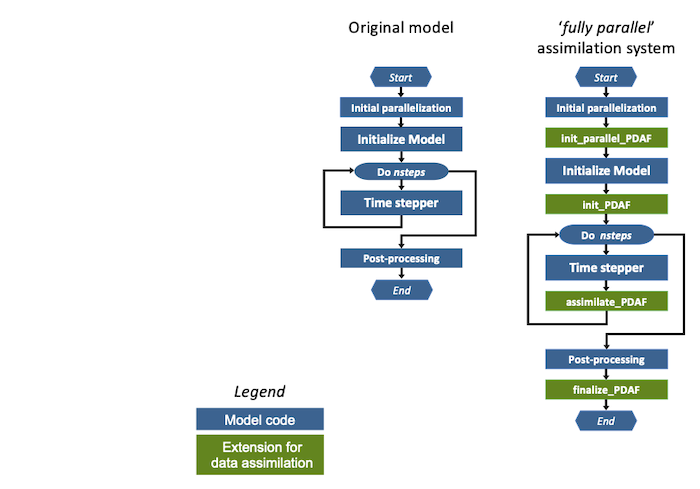

The extension to the model code for the fully parallel implementation is depicted in Figure 1 (see also the page on the implementation concept of the online mode). At this stage of the implementation, the calls to init_parallel_pdaf and init_pdaf are already inserted into the code, so the remaining difference is the addition of routines for the time stepping. The parallel ensemble integration is enabled by the configuration of the parallelization that was done by init_parallel_pdaf in the first step of the implementation. This does not require further code changes.

For the fully parallel parallelization variant, the number of time steps nsteps shown in Figure 1 is the overall length of the data assimilation run. This can include several data assimilation cycles of forecasts and analysis steps.

Figure 1: (left) Generic structure of a model code, (right) modified structure for a fully-parallel data assimilation system with PDAF. The figure assumes that the model is parallelized, such that it initializes its parallelization in the step 'initial parallelization'. If the model is not parallelized, this step does not exist.

There are two further steps required now:

- We need to initialize the ensemble forecasting. Thus, we need to set how many time steps need to be done until the first observations are assimilated. In addition, we need to write the ensemble state vector values into the model fields. For these operations, we call the routine PDAF_init_forecast in the routine init_pdaf.

- To enable the analysis step to be performed, we then have to insert the routine assimilate_pdaf into the model code.

Both are described below.

Calling PDAF_init_forecast

The routine PDAF_init_forecast is called at the end of the routine init_pdaf that was discussed on the page on initializing PDAF. The main purpose of this routine is to initialize the model fields for all model tasks from the ensemble of state vectors. This is done in the call-back routine distribute_state_pdaf. Further, the routine calls the call-back routine prepoststep_pdaf. This pre/poststep routine gives the user access to the initial ensemble. The routine also returns the number of time steps for the initial forecast phase and an exit flag. In the fully parallel variant, these variables are usually not used in the user code, but only internally by PDAF.

The interface of PDAF_init_forecast is:

    SUBROUTINE PDAF_init_forecast(nsteps, timenow, doexit, &
         next_observation_pdaf, distribute_state_pdaf, &
         prepoststep_pdaf, status)

with the arguments (for the fully-parallel variant, the user-supplied routines are the arguments relevant to the user):

- nsteps: An integer specifying upon exit the number of time steps to be performed.

- timenow: A real specifying upon exit the current model time.

- doexit: An integer variable defining whether the assimilation process is completed (1: exit, 0: continue).

- U_next_observation: The name of a user-supplied routine that initializes the variables nsteps, timenow, and doexit.

- U_distribute_state: The name of a user-supplied routine that initializes the model fields from the array holding the ensemble of model state vectors.

- U_prepoststep: The name of a user-supplied routine that is called before and after the analysis step. Here the user can access the state ensemble and can, e.g., compute estimated variances or write the ensemble states and the state estimate into files.

- status: The integer status flag. It is zero if the routine is exited without errors. We recommend checking the value.

The names U_next_observation, U_distribute_state, and U_prepoststep correspond to next_observation_pdaf, distribute_state_pdaf, and prepoststep_pdaf in the interface above.

The user-supplied routines are described further below.
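The call with the recommended status check could look like the following minimal sketch. It assumes that the three call-back routines exist as external subroutines with the names used in the interface above. The wrapper name init_forecast_sketch and the error message are hypothetical, and building an executable from it requires linking against the PDAF library.

```fortran
! Hypothetical wrapper for illustration; in practice the call is
! placed at the end of the routine init_pdaf.
SUBROUTINE init_forecast_sketch(status)

  IMPLICIT NONE

  INTEGER, INTENT(out) :: status   ! Status flag returned by PDAF
  INTEGER :: nsteps                ! Number of time steps of the first forecast phase
  INTEGER :: doexit                ! Exit flag (not used in the user code here)
  REAL    :: timenow               ! Current model time

  ! Names of the user-supplied call-back routines
  EXTERNAL :: next_observation_pdaf, distribute_state_pdaf, prepoststep_pdaf

  CALL PDAF_init_forecast(nsteps, timenow, doexit, &
       next_observation_pdaf, distribute_state_pdaf, &
       prepoststep_pdaf, status)

  ! A non-zero status indicates an error
  IF (status /= 0) THEN
     WRITE (*, '(a, i6)') 'ERROR in PDAF_init_forecast, status = ', status
  END IF

END SUBROUTINE init_forecast_sketch
```

How the program reacts to a non-zero status (for example, aborting the parallel run) is left to the user code and is not shown here.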

Inserting assimilate_pdaf into the model code

The right place to insert the routine assimilate_pdaf into the model code is at the end of the model time stepping loop, that is, when the model has completed the computation of a single time step. In most cases, this is just before the 'END DO' in the model source code. However, there might be special cases where the model does some additional operations, so that the call to assimilate_pdaf should be inserted somewhat earlier.
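The placement can be sketched with a self-contained toy example. This is not model code: model_step and assimilate_stub are hypothetical stand-ins for one model time step and for the user-written routine assimilate_pdaf, which in a real setup calls PDAF.

```fortran
MODULE timeloop_sketch

  IMPLICIT NONE

  INTEGER :: n_calls = 0   ! Counts the calls to the stand-in routine

CONTAINS

  ! Hypothetical stand-in for assimilate_pdaf; it only counts its calls
  SUBROUTINE assimilate_stub()
    n_calls = n_calls + 1
  END SUBROUTINE assimilate_stub

  ! Hypothetical stand-in for the computation of one model time step
  SUBROUTINE model_step(field)
    REAL, INTENT(inout) :: field(:)
    field = field + 1.0
  END SUBROUTINE model_step

  ! Time stepping loop over the full length of the assimilation run
  SUBROUTINE integrate(nsteps, field)
    INTEGER, INTENT(in) :: nsteps
    REAL, INTENT(inout) :: field(:)
    INTEGER :: step

    DO step = 1, nsteps
       CALL model_step(field)

       ! Inserted as the last operation of the time step,
       ! just before the END DO
       CALL assimilate_stub()
    END DO
  END SUBROUTINE integrate

END MODULE timeloop_sketch
```

Because the call sits inside the loop, the stand-in is executed once per time step, which matches the statement that assimilate_pdaf is called at each time step of the forecast.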

Using assimilate_pdaf

The purpose of assimilate_pdaf is to call the universal PDAF-core routine PDAF3_assimilate (or a more specific variant of this routine). Apart from an argument for the status flag, the arguments of PDAF3_assimilate are mainly the names of user-supplied call-back routines. These names are specified in assimilate_pdaf as 'external'.

The routine assimilate_pdaf is called at each time step of the model forecast. This makes it possible, e.g., to apply incremental analysis updates.

Details on the implementation of the user routines for the analysis step are provided on the following page on implementing the analysis step.

User-supplied routines

Here, we discuss the user-supplied routines that are arguments of PDAF_init_forecast. Only the user-supplied routines that are required at this stage of the implementation (that is, the ensemble integration) are discussed. For testing (see Compilation and testing), all routines need to exist, but only those described here in detail need to be implemented with functionality.

To indicate user-supplied routines we use the prefix U_. In the tutorials in tutorial/ and in the template directory templates/ these routines exist without the prefix, but with the extension _pdaf. The files are named correspondingly. In the section titles below we provide the name of the template file in parentheses.

The user-supplied routines are in general identical for the 'fully parallel' and 'flexible' implementation variants. The only difference is in U_next_observation, as described below.

next_observation_pdaf (next_observation_pdaf.F90)

The interface for this routine is

    SUBROUTINE next_observation_pdaf(stepnow, nsteps, doexit, timenow)

    INTEGER, INTENT(in)  :: stepnow  ! Number of the current time step
    INTEGER, INTENT(out) :: nsteps   ! Number of time steps until next obs
    INTEGER, INTENT(out) :: doexit   ! Whether to exit forecasting (1 for exit)
    REAL, INTENT(out)    :: timenow  ! Current model (physical) time (can be defined freely by the user)

The routine is called by PDAF_init_forecast and later at the beginning of each forecast phase. It is executed by all processes that participate in the model integrations.

Based on the information of the current time step, the routine has to define the number of time steps nsteps for the next forecast phase. For the 'fully parallel' data assimilation variant the flag doexit is not used in the user code. timenow is the current model time. For the 'fully parallel' data assimilation variant, PDAF does not use this value. The user can either ignore it (setting it to 0.0) or use it freely to indicate the model time.

Some hints:

- Usually, the time interval between successive observations is known. Then, nsteps can simply be initialized by dividing the time interval by the size of the time step.

- At the first call to next_observation_pdaf the variable timenow can be initialized with the current model time. At the next call a forecast phase has been completed. Thus, the new value of timenow follows from the time interval of the previous forecast phase and can be incremented accordingly.

- doexit is not relevant for the fully-parallel implementation. It is recommended to set doexit=0 in all cases.

- If nsteps=0 or doexit=1 is set, the ensemble state will not be distributed by PDAF (thus distribute_state is not called). If one intends to proceed with ensemble forecasting, one has to set nsteps to a value >0 and doexit=0. If nsteps is set so that it specifies a time step larger than the last overall time step of the assimilation run, the number of time steps in the final forecast will be the total number of steps.
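The hints above can be sketched as follows, assuming a constant observation interval and a constant time step. The module mod_timing_sketch, its variables, and their values are hypothetical and only serve this illustration. The sketch further assumes that time step 0 corresponds to the model time time0.

```fortran
! Hypothetical module holding the timing information of the model
MODULE mod_timing_sketch
  IMPLICIT NONE
  REAL :: dt       = 600.0     ! Size of the model time step (assumed value)
  REAL :: delt_obs = 10800.0   ! Time interval between observations (assumed value)
  REAL :: time0    = 0.0       ! Model time at time step 0 (assumed value)
END MODULE mod_timing_sketch

SUBROUTINE next_observation_pdaf(stepnow, nsteps, doexit, timenow)

  USE mod_timing_sketch, ONLY: dt, delt_obs, time0

  IMPLICIT NONE

  INTEGER, INTENT(in)  :: stepnow  ! Number of the current time step
  INTEGER, INTENT(out) :: nsteps   ! Number of time steps until next obs
  INTEGER, INTENT(out) :: doexit   ! Whether to exit forecasting (1 for exit)
  REAL, INTENT(out)    :: timenow  ! Current model (physical) time

  ! Observation interval divided by the size of the time step
  nsteps = NINT(delt_obs / dt)

  ! Recommended setting for the fully parallel variant
  doexit = 0

  ! Model time that follows from the time steps completed so far
  timenow = time0 + REAL(stepnow) * dt

END SUBROUTINE next_observation_pdaf
```

With the assumed values, each forecast phase spans 18 time steps. Computing timenow from stepnow avoids keeping a separate counter for the previous forecast phase, but an explicit increment would work equally well.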

U_distribute_state (distribute_state_pdaf.F90)

The interface for this routine is

    SUBROUTINE distribute_state(dim_p, state_p)

    INTEGER, INTENT(in) :: dim_p           ! State dimension for PE-local model sub-domain
    REAL, INTENT(inout) :: state_p(dim_p)  ! State vector for PE-local model sub-domain

This routine is called during the forecast phase as many times as there are states to be integrated by a model task. Again, the routine is executed by all processes that belong to model tasks.

When the routine is called a state vector state_p and its size dim_p are provided. As the user has defined how the model fields are stored in the state vector, one can initialize the model fields from this information. If the model is not parallelized, state_p will contain a full state vector. If the model is parallelized using domain decomposition, state_p will contain the part of the state vector that corresponds to the model sub-domain for the calling process.

Some hints:

- If the state vector does not include all model fields, it can be useful to keep a separate array to store those additional fields. This array has to be kept separate from PDAF, but can be defined using a module like mod_assimilation.
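As a minimal sketch of such a routine, assume that the state vector holds a single two-dimensional model field stored column by column, and that dim_p equals the number of grid points. The module mod_model_sketch with the variables nx, ny, and field is hypothetical. A real model would provide its own fields.

```fortran
! Hypothetical module holding the model field
MODULE mod_model_sketch
  IMPLICIT NONE
  INTEGER, PARAMETER :: ny = 3   ! Grid size in y-direction (assumed value)
  INTEGER, PARAMETER :: nx = 4   ! Grid size in x-direction (assumed value)
  REAL :: field(ny, nx)          ! Model field
END MODULE mod_model_sketch

SUBROUTINE distribute_state_pdaf(dim_p, state_p)

  USE mod_model_sketch, ONLY: nx, ny, field

  IMPLICIT NONE

  INTEGER, INTENT(in) :: dim_p           ! State dimension for PE-local model sub-domain
  REAL, INTENT(inout) :: state_p(dim_p)  ! State vector for PE-local model sub-domain

  ! Copy the state vector into the model field (column-major order);
  ! this assumes dim_p = nx * ny
  field = RESHAPE(state_p, (/ ny, nx /))

END SUBROUTINE distribute_state_pdaf
```

If the state vector contained several fields, each field would be copied from its own section of state_p, following the storage order that the user defined for the state vector.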

U_prepoststep (prepoststep_ens_pdaf.F90)

The interface of the routine is identical for all filters. However, the particular operations that are performed in the routine can be specific for each filter algorithm. Here, we show the interface using the example of the ESTKF and LESTKF filters.

The interface for this routine is

    SUBROUTINE prepoststep(step, dim_p, dim_ens, dim_ens_p, dim_obs_p, &
         state_p, Uinv, ens_p, flag)

    INTEGER, INTENT(in) :: step        ! Current time step
                                       ! (When the routine is called before the analysis, -step is provided.)
    INTEGER, INTENT(in) :: dim_p       ! PE-local state dimension
    INTEGER, INTENT(in) :: dim_ens     ! Size of state ensemble
    INTEGER, INTENT(in) :: dim_ens_p   ! PE-local size of ensemble
    INTEGER, INTENT(in) :: dim_obs_p   ! PE-local dimension of observation vector
    REAL, INTENT(inout) :: state_p(dim_p)              ! PE-local forecast/analysis state
                                       ! The array 'state_p' is generally not initialized.
                                       ! It can be used freely in this routine.
    REAL, INTENT(inout) :: Uinv(dim_ens-1, dim_ens-1)  ! Inverse of matrix U
    REAL, INTENT(inout) :: ens_p(dim_p, dim_ens)       ! PE-local state ensemble
    INTEGER, INTENT(in) :: flag        ! PDAF status flag

The routine U_prepoststep is called once at the beginning of the assimilation process. In addition, it is called during the assimilation cycles before the analysis step and after the ensemble transformation. The routine is called by all filter processes (that is, filterpe=1).

The routine gives the user full access to the ensemble of model states. Thus, user-controlled pre- and post-step operations can be performed. For example, the forecast and analysis states and the ensemble covariance matrix can be analyzed, e.g. by computing the estimated variances. If the smoother is used, the smoothed ensembles can also be analyzed. In addition, the estimates can be written to disk.

Hints:

- If a user considers performing adjustments to the estimates (e.g. for balances), this routine is the right place for it.

- Only for the SEEK filter the state vector (state_p) is initialized. For all other filters, the array is allocated, but it can be used freely during the execution of U_prepoststep.

- The interface through which U_prepoststep is called does not include the array of smoothed ensembles. In order to access the smoother ensemble array one has to set a pointer to it using a call to the routine PDAF_get_smootherens (see page on auxiliary routines).
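A minimal sketch of a pre/poststep routine that computes the ensemble mean and the estimated variances is shown below. It uses the interface given above and assumes an ensemble size larger than one. The local variable names and the screen output are illustrative choices. The values are process-local, so with a domain-decomposed model a global RMS value would additionally require a reduction over the filter processes, which is not shown.

```fortran
SUBROUTINE prepoststep_pdaf(step, dim_p, dim_ens, dim_ens_p, dim_obs_p, &
     state_p, Uinv, ens_p, flag)

  IMPLICIT NONE

  INTEGER, INTENT(in) :: step        ! Current time step (negative before the analysis)
  INTEGER, INTENT(in) :: dim_p       ! PE-local state dimension
  INTEGER, INTENT(in) :: dim_ens     ! Size of state ensemble
  INTEGER, INTENT(in) :: dim_ens_p   ! PE-local size of ensemble
  INTEGER, INTENT(in) :: dim_obs_p   ! PE-local dimension of observation vector
  REAL, INTENT(inout) :: state_p(dim_p)              ! Used here for the ensemble mean
  REAL, INTENT(inout) :: Uinv(dim_ens-1, dim_ens-1)  ! Inverse of matrix U (not used)
  REAL, INTENT(inout) :: ens_p(dim_p, dim_ens)       ! PE-local state ensemble
  INTEGER, INTENT(in) :: flag        ! PDAF status flag (not used)

  REAL :: variance_p(dim_p)   ! Estimated variances (process-local)
  REAL :: rmserror_est        ! Estimated RMS error (process-local)
  INTEGER :: member           ! Counter over ensemble members

  ! Ensemble mean state
  state_p = SUM(ens_p, DIM=2) / REAL(dim_ens)

  ! Sample variance of each state element
  variance_p = 0.0
  DO member = 1, dim_ens
     variance_p = variance_p + (ens_p(:, member) - state_p)**2
  END DO
  variance_p = variance_p / REAL(dim_ens - 1)

  ! Estimated RMS error from the mean variance
  rmserror_est = SQRT(SUM(variance_p) / REAL(dim_p))

  IF (step < 0) THEN
     WRITE (*, '(a, i7, a, es12.4)') 'Forecast at step ', -step, &
          ', estimated RMS error: ', rmserror_est
  ELSE
     WRITE (*, '(a, i7, a, es12.4)') 'Initial or analysis at step ', step, &
          ', estimated RMS error: ', rmserror_est
  END IF

END SUBROUTINE prepoststep_pdaf
```

Storing the mean in state_p is possible because, except for the SEEK filter, the array is not initialized by PDAF and can be used freely in this routine.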
