Adapting a model's parallelization for PDAF

This is an outdated page, probably reached through an outdated link. The correct page is likely:
- For PDAF3: OnlineAdaptParallelization_PDAF3
- For PDAF2: AdaptParallelization_PDAF23

Overview

The PDAF release provides example code for the online mode in tutorial/online_2D_serialmodel and tutorial/online_2D_parallelmodel. We refer to this code and recommend using it as a basis.

In the tutorial code and the templates in templates/online, the parallelization is initialized in the routine init_parallel_pdaf (file init_parallel_pdaf.F90). The required variables are defined in mod_parallel_pdaf.F90. These files can be used as templates.
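For orientation, the variables that the following sections refer to can be pictured as a single module. The sketch below is an assumption, not a copy of mod_parallel_pdaf.F90: the module name mod_parallel_sketch and the exact variable list are illustrative, and the actual template may declare more or differently named variables. It only mirrors the default stated further below, namely that a single model task is used (n_modeltasks=1).

```fortran
! Hypothetical sketch of a module holding the parallelization variables
! named in this page. Not the PDAF template; names are illustrative.
MODULE mod_parallel_sketch
  IMPLICIT NONE
  SAVE

  ! Communicator handles, set when MPI_COMM_WORLD is split
  INTEGER :: COMM_model    ! processes of one model task
  INTEGER :: COMM_filter   ! processes that compute the analysis step
  INTEGER :: COMM_couple   ! processes that exchange ensemble states

  ! Ranks and sizes in MPI_COMM_WORLD and in the split communicators
  INTEGER :: mype_world = 0, npes_world = 1
  INTEGER :: mype_model = 0, npes_model = 1
  INTEGER :: mype_filter = 0, npes_filter = 1
  INTEGER :: mype_couple = 0, npes_couple = 1

  ! Ensemble parallelization
  INTEGER :: n_modeltasks = 1    ! default: a single model task
  INTEGER :: task_id = 1         ! model task of this process (1..n_modeltasks)
  LOGICAL :: filterpe = .FALSE.  ! .TRUE. if this process belongs to COMM_filter

  INTEGER :: MPIerr              ! error flag of MPI calls
END MODULE mod_parallel_sketch
```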

Like many numerical models, PDAF uses the MPI standard for the parallelization. For the case of a parallelized model, we assume in the description below that the model is parallelized using MPI. If the model is parallelized using OpenMP, one can follow the explanations for a non-parallel model below.

As explained on the page on the Implementation concept of the online mode, PDAF supports a 2-level parallelization. First, the numerical model can be parallelized and can be executed using several processors. Second, several model tasks can be computed in parallel, i.e. a parallel ensemble integration can be performed. We need to configure the parallelization so that more than one model task can be computed.

There are two possible cases regarding the parallelization for enabling the 2-level parallelization:

- The model itself is parallelized using MPI
  - In this case we need to adapt the parallelization of the model
- The model is not parallelized or uses only shared-memory parallelization using OpenMP
  - In this case we need to add parallelization

Adaptions for a parallelized model

If the online mode is implemented with a parallelized model, one has to ensure that the parallelization can be split to perform the parallel ensemble forecast. For this, one has to check the model source code and potentially adapt it.

The adaptions in short

If you are experienced with MPI, the steps are the following:

- Find the call to MPI_init.
- Insert the call to init_parallel_pdaf directly after MPI_init.
- Check whether the model uses MPI_COMM_WORLD.
  - If yes, then replace MPI_COMM_WORLD in all places except MPI_abort and MPI_finalize by a user-defined communicator (we call it COMM_mymodel here), which can be initialized as COMM_mymodel=MPI_COMM_WORLD.
  - If no, then take note of the name of the communicator variable (we assume it's COMM_mymodel). The next steps are valid if the model uses COMM_mymodel in a way that all processes of the program are included (thus analogous to MPI_COMM_WORLD). If the model is using fewer processes, this is a special case, which we discuss further below.
- Adapt init_parallel_pdaf so that at the end of this routine you set COMM_mymodel=COMM_model. Potentially, also set the rank and size variables of the model by mype_model and npes_model, respectively.
- The number of model tasks in variable n_modeltasks is required by init_parallel_pdaf to perform the communicator splitting. In the tutorial code we added a command-line parsing to set the variable (it is parsing for dim_ens). One could also read the value from a configuration file.

Details on adapting a parallelized model

Any program parallelized with MPI needs to call MPI_Init to initialize MPI. Frequently, the parallelization of a model is initialized in the model by the lines:

CALL MPI_Init(ierr)
CALL MPI_Comm_rank(MPI_COMM_WORLD, rank, ierr)
CALL MPI_Comm_size(MPI_COMM_WORLD, size, ierr)

Here, the call to MPI_init is mandatory, while the two other lines are optional, but common. The call to MPI_init initializes the MPI environment of the parallel program. It also initializes the communicator MPI_COMM_WORLD, which is pre-defined by MPI to contain all processes of the MPI-parallel program.

In the model code, we have to find the place where MPI_init is called to check how the parallelization is set up. In particular, we have to check if the parallelization is ready to be split into model tasks.

For this, one has to check whether the model uses MPI_COMM_WORLD, e.g. by checking the calls to MPI_Comm_rank and MPI_Comm_size, or MPI communication calls in the code (e.g. MPI_Send, MPI_Recv, MPI_Barrier).

- If the model uses MPI_COMM_WORLD, we have to replace this by a user-defined communicator, e.g. COMM_mymodel. One has to declare this variable in a module and initialize it as COMM_mymodel=MPI_COMM_WORLD. This change must not influence the execution of the model. It can be useful to do a test run to check for this.
- If the model uses a different communicator, one should take note of its name (below we refer to it as COMM_mymodel). We will then overwrite it to be able to represent the ensemble of model tasks. Please check if COMM_mymodel is identical to MPI_COMM_WORLD, thus including all processes of the program. If not, this is a special case, which we discuss further below.
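The replacement of MPI_COMM_WORLD can be sketched as follows, assuming the model keeps its communicator in a module. The names mod_mymodel_comm, init_model_comm, mype_mymodel and npes_mymodel are hypothetical. As COMM_mymodel is only a copy of MPI_COMM_WORLD at this stage, the model should behave exactly as before.

```fortran
! Hypothetical module holding the model's own communicator. All model MPI
! calls use COMM_mymodel instead of MPI_COMM_WORLD; MPI_Abort keeps using
! MPI_COMM_WORLD so that an error still stops the whole program.
MODULE mod_mymodel_comm
  USE mpi
  IMPLICIT NONE
  SAVE
  INTEGER :: COMM_mymodel   ! communicator used by the model
  INTEGER :: mype_mymodel   ! rank of this process in COMM_mymodel
  INTEGER :: npes_mymodel   ! number of processes in COMM_mymodel
  INTEGER :: MPIerr         ! error flag of MPI calls

CONTAINS

  ! To be called directly after MPI_Init in the model's main program
  SUBROUTINE init_model_comm()
    ! For now only a copy of MPI_COMM_WORLD: model behaviour is unchanged
    COMM_mymodel = MPI_COMM_WORLD

    ! Formerly MPI_Comm_rank/MPI_Comm_size on MPI_COMM_WORLD
    CALL MPI_Comm_rank(COMM_mymodel, mype_mymodel, MPIerr)
    CALL MPI_Comm_size(COMM_mymodel, npes_mymodel, MPIerr)
  END SUBROUTINE init_model_comm

END MODULE mod_mymodel_comm
```

In the model's main program, init_model_comm would be called right after MPI_Init, and all further model MPI calls would pass COMM_mymodel.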

Now, COMM_mymodel will be replaced by the communicator COMM_model, which groups the processes of each model task. For this, we adapt init_parallel_pdaf so that at the end of this routine we set COMM_mymodel=COMM_model. Potentially, we also set the rank and size variables of the model by mype_model and npes_model, respectively.
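The hand-over at the end of init_parallel_pdaf amounts to a few assignments. In this minimal sketch the module mod_handover_sketch and the routine hand_over_model_comm are hypothetical, and the variables merely stand in for those of mod_parallel_pdaf and of the model; in real code one would USE the respective modules instead.

```fortran
! Hypothetical helper, called as the last statement of init_parallel_pdaf.
! It hands the per-task communicator to the model, so that each model task
! only communicates within its own group of processes.
MODULE mod_handover_sketch
  IMPLICIT NONE
  SAVE
  ! Stand-ins for variables set by the communicator splitting
  INTEGER :: COMM_model, mype_model, npes_model
  ! Stand-ins for the model's own communicator, rank and size
  INTEGER :: COMM_mymodel, mype_mymodel, npes_mymodel
CONTAINS
  SUBROUTINE hand_over_model_comm()
    COMM_mymodel = COMM_model   ! model now works within one model task
    mype_mymodel = mype_model   ! rank within the model task
    npes_mymodel = npes_model   ! number of processes of the model task
  END SUBROUTINE hand_over_model_comm
END MODULE mod_handover_sketch
```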

Finally, we have to ensure that the number of model tasks is correctly set. In the template and tutorial codes, the number of model tasks is specified by the variable n_modeltasks. This has to be set before the operations on communicators are done in init_parallel_pdaf. In the tutorial code we added a command-line parsing to set the variable (it is parsing for dim_ens). One could also read the value from a configuration file.
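As an alternative to the parser_mpi routines used in the tutorial, n_modeltasks could be read with the standard Fortran 2003 intrinsics COMMAND_ARGUMENT_COUNT and GET_COMMAND_ARGUMENT. This is a swapped-in technique, not the tutorial's parser, and the function name read_n_modeltasks is hypothetical. It looks for the option -dim_ens and keeps a default value if the option is missing or invalid.

```fortran
! Hypothetical replacement for the parser_mpi-based parsing: returns N from
! "-dim_ens N" on the command line, or default_value if absent or invalid.
MODULE mod_read_ntasks_sketch
  IMPLICIT NONE
CONTAINS
  FUNCTION read_n_modeltasks(default_value) RESULT(n)
    INTEGER, INTENT(in) :: default_value
    INTEGER :: n
    INTEGER :: i, ios
    CHARACTER(len=256) :: arg

    n = default_value
    ! Stop one before the last argument, since the option needs a value
    DO i = 1, COMMAND_ARGUMENT_COUNT() - 1
      CALL GET_COMMAND_ARGUMENT(i, arg)
      IF (TRIM(arg) == '-dim_ens') THEN
        CALL GET_COMMAND_ARGUMENT(i + 1, arg)
        READ (arg, *, IOSTAT=ios) n
        IF (ios /= 0) n = default_value   ! not an integer: keep default
        IF (n < 1) n = default_value      ! invalid count: keep default
        EXIT
      END IF
    END DO
  END FUNCTION read_n_modeltasks
END MODULE mod_read_ntasks_sketch
```

A call like n_modeltasks = read_n_modeltasks(1) would have to happen before the communicators are split. The sketch assumes that every process sees the same command line; this is common, but reading the value on one process and broadcasting it is the more robust variant.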

If the program is executed with these extensions using multiple model tasks, the issues discussed in 'Compiling and testing the extended program' can occur. Thus, one has to take care of which processes perform output to the screen or to files.

Adaptions for a serial model

The adaptions in short

If you are experienced with MPI, the steps are the following using the files mod_parallel_pdaf.F90, init_parallel_pdaf.F90, finalize_pdaf.F90 and parser_mpi.F90 from tutorial/online_2D_serialmodel.

- Insert CALL init_parallel_pdaf(0, 1) at the beginning of the main program. This routine will also call MPI_Init.
- Insert CALL finalize_pdaf() at the end of the main program. This routine will also finalize MPI.
- The number of model tasks in variable n_modeltasks is determined in init_parallel_pdaf by command-line parsing (it is parsing for dim_ens, thus setting -dim_ens N_MODELTASKS, where N_MODELTASKS should be the ensemble size). One could also read the value from a configuration file.

Details on adapting a serial model

If the numerical model is not parallelized (i.e. serial), we need to add the parallelization for the ensemble. We follow here the approach used in the tutorial code tutorial/online_2D_serialmodel. The files mod_parallel_pdaf.F90, init_parallel_pdaf.F90, finalize_pdaf.F90 (and parser_mpi.F90) can be used directly. Note that init_parallel_pdaf uses command-line parsing (from parser_mpi.F90) to read the number of model tasks (n_modeltasks) from the command line (specifying -dim_ens N_MODELTASKS, where N_MODELTASKS should be the ensemble size). One can replace this, e.g., by reading from a configuration file.

In the tutorial code, the parallelization is simply initialized by adding the line

CALL init_parallel_pdaf(0, 1)

into the source code of the main program. This is done at the very beginning of the functional code part.

The initialization of MPI itself (by a call to MPI_Init) is included in init_parallel_pdaf.

The finalization of MPI is included in finalize_pdaf.F90 by a call to finalize_parallel(). The line

CALL finalize_pdaf()

should be inserted at the end of the main program.

With these changes the model is ready to perform an ensemble simulation.
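The resulting structure of a serial model's main program can be sketched with two stand-in routines. init_parallel_sketch and finalize_sketch are hypothetical placeholders for init_parallel_pdaf and finalize_pdaf: they only show where MPI is initialized and finalized and omit the communicator splitting that init_parallel_pdaf performs.

```fortran
! Hypothetical stand-ins for init_parallel_pdaf and finalize_pdaf from
! tutorial/online_2D_serialmodel, reduced to MPI start-up and shut-down.
MODULE mod_serial_skeleton
  USE mpi
  IMPLICIT NONE
  SAVE
  INTEGER :: mype_world = 0, npes_world = 1
  INTEGER :: MPIerr
CONTAINS
  SUBROUTINE init_parallel_sketch()
    CALL MPI_Init(MPIerr)   ! init_parallel_pdaf also initializes MPI
    CALL MPI_Comm_rank(MPI_COMM_WORLD, mype_world, MPIerr)
    CALL MPI_Comm_size(MPI_COMM_WORLD, npes_world, MPIerr)
    ! ... init_parallel_pdaf would split MPI_COMM_WORLD here ...
  END SUBROUTINE init_parallel_sketch

  SUBROUTINE finalize_sketch()
    CALL MPI_Finalize(MPIerr)   ! finalize_pdaf also finalizes MPI
  END SUBROUTINE finalize_sketch
END MODULE mod_serial_skeleton
```

In the main program, CALL init_parallel_sketch() (in the tutorial: CALL init_parallel_pdaf(0, 1)) becomes the first executable statement and CALL finalize_sketch() (in the tutorial: CALL finalize_pdaf()) the last one; the serial model code in between stays unchanged.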

If the program is executed with these extensions using multiple model tasks, the issues discussed in 'Compiling and testing the extended program' can occur. Thus, one has to take care of which processes perform output to the screen or to files.

Further Information

Communicators created by init_parallel_pdaf

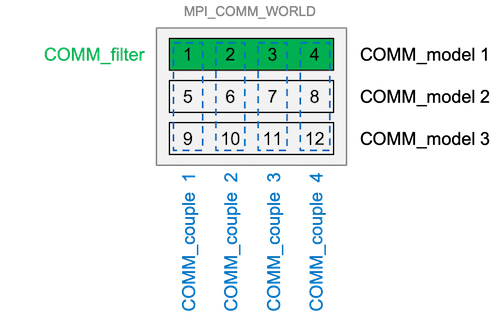

MPI uses so-called 'communicators' to define groups of parallel processes. These groups can then conveniently exchange information. In order to provide the 2-level parallelism for PDAF, three communicators are initialized that define the processes that are involved in different tasks of the data assimilation system.

The required communicators are initialized in the routine init_parallel_pdaf. They are called

- COMM_model - defines the groups of processes that are involved in the model integrations (one group for each model task)
- COMM_filter - defines the group of processes that perform the filter analysis step
- COMM_couple - defines the groups of processes that are involved when data are transferred between the model and the filter. This is used to distribute and collect ensemble states.

Figure 1: Example of a typical configuration of the communicators using a parallelized model. In this example we have 12 processes overall, which are distributed over 3 model tasks (COMM_model) so that 3 model states can be integrated at the same time. Each COMM_couple combines the processes that have the same rank in COMM_model across the 3 model tasks. The filter is executed using COMM_filter, which uses the same processes as the first model task, i.e. COMM_model 1 (Figure credits: A. Corbin)
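The distribution of processes in Figure 1 can be computed without MPI. The following sketch assumes that processes are assigned to model tasks in contiguous blocks of world ranks and that, if npes_world is not divisible by n_modeltasks, the first tasks get one extra process; whether init_parallel_pdaf uses exactly this rule is an assumption, and the function names are hypothetical. For 12 processes and 3 tasks, ranks 0-3, 4-7 and 8-11 form the three model tasks, and processes with equal rank_in_task (e.g. world ranks 1, 5 and 9) would form one COMM_couple.

```fortran
! Hypothetical mapping of world ranks to model tasks (contiguous blocks,
! first tasks one process larger if the division is uneven).
! Assumes 1 <= n_modeltasks <= npes_world.
MODULE mod_task_layout_sketch
  IMPLICIT NONE
CONTAINS
  ! Model task (1..n_modeltasks) of the process with rank mype_world
  PURE INTEGER FUNCTION task_of_rank(mype_world, npes_world, n_modeltasks)
    INTEGER, INTENT(in) :: mype_world, npes_world, n_modeltasks
    INTEGER :: n_per, n_rem
    n_per = npes_world / n_modeltasks      ! processes per task, rounded down
    n_rem = MOD(npes_world, n_modeltasks)  ! number of tasks with one extra
    IF (mype_world < n_rem * (n_per + 1)) THEN
      task_of_rank = mype_world / (n_per + 1) + 1
    ELSE
      task_of_rank = n_rem + (mype_world - n_rem * (n_per + 1)) / n_per + 1
    END IF
  END FUNCTION task_of_rank

  ! Rank of the process within its model task (its rank in COMM_model)
  PURE INTEGER FUNCTION rank_in_task(mype_world, npes_world, n_modeltasks)
    INTEGER, INTENT(in) :: mype_world, npes_world, n_modeltasks
    INTEGER :: n_per, n_rem, itask
    n_per = npes_world / n_modeltasks
    n_rem = MOD(npes_world, n_modeltasks)
    itask = task_of_rank(mype_world, npes_world, n_modeltasks)
    ! Subtract the first world rank of task itask
    rank_in_task = mype_world - ((itask - 1) * n_per + MIN(itask - 1, n_rem))
  END FUNCTION rank_in_task
END MODULE mod_task_layout_sketch
```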

An important aspect is that the template version of init_parallel_pdaf itself uses MPI_COMM_WORLD and splits this to create the three communicators.
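The splitting can be sketched with MPI_Comm_split, which creates one new communicator for each distinct 'color' value. This minimal sketch is not the PDAF template: it assumes a contiguous assignment of processes to model tasks, lets the processes of model task 1 form COMM_filter, and groups processes with equal rank in COMM_model into COMM_couple, consistent with Figure 1 and the example output further below. The module and routine names are hypothetical.

```fortran
! Hypothetical sketch of splitting MPI_COMM_WORLD into COMM_model,
! COMM_filter and COMM_couple. Not the PDAF template.
MODULE mod_split_sketch
  USE mpi
  IMPLICIT NONE
  SAVE
  INTEGER :: COMM_model, COMM_filter, COMM_couple
  INTEGER :: mype_world, npes_world, mype_model, npes_model
  INTEGER :: mype_filter = -1, npes_filter = 0
  INTEGER :: mype_couple, npes_couple
  INTEGER :: n_modeltasks = 1, task_id = 1
  LOGICAL :: filterpe = .FALSE.
  INTEGER :: MPIerr

CONTAINS

  SUBROUTINE split_communicators()
    INTEGER :: n_per, n_rem, color

    CALL MPI_Comm_rank(MPI_COMM_WORLD, mype_world, MPIerr)
    CALL MPI_Comm_size(MPI_COMM_WORLD, npes_world, MPIerr)
    n_modeltasks = MAX(1, MIN(n_modeltasks, npes_world))  ! <= 1 task per process

    ! Model task of this process: contiguous blocks of world ranks
    n_per = npes_world / n_modeltasks
    n_rem = MOD(npes_world, n_modeltasks)
    IF (mype_world < n_rem * (n_per + 1)) THEN
      task_id = mype_world / (n_per + 1) + 1
    ELSE
      task_id = n_rem + (mype_world - n_rem * (n_per + 1)) / n_per + 1
    END IF

    ! COMM_model: one communicator per model task (color = task_id)
    CALL MPI_Comm_split(MPI_COMM_WORLD, task_id, mype_world, COMM_model, MPIerr)
    CALL MPI_Comm_rank(COMM_model, mype_model, MPIerr)
    CALL MPI_Comm_size(COMM_model, npes_model, MPIerr)

    ! COMM_filter: processes of model task 1; others receive MPI_COMM_NULL
    filterpe = (task_id == 1)
    color = MPI_UNDEFINED
    IF (filterpe) color = 1
    CALL MPI_Comm_split(MPI_COMM_WORLD, color, mype_world, COMM_filter, MPIerr)
    IF (filterpe) THEN
      CALL MPI_Comm_rank(COMM_filter, mype_filter, MPIerr)
      CALL MPI_Comm_size(COMM_filter, npes_filter, MPIerr)
    END IF

    ! COMM_couple: processes with the same rank in their model task
    CALL MPI_Comm_split(MPI_COMM_WORLD, mype_model, mype_world, COMM_couple, MPIerr)
    CALL MPI_Comm_rank(COMM_couple, mype_couple, MPIerr)
    CALL MPI_Comm_size(COMM_couple, npes_couple, MPIerr)
  END SUBROUTINE split_communicators

END MODULE mod_split_sketch
```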

Arguments of init_parallel_pdaf

The routine init_parallel_pdaf has two arguments, which are the following:

SUBROUTINE init_parallel_pdaf(dim_ens, screen)

- dim_ens: An integer defining the ensemble size. This allows checking the consistency of the ensemble size with the number of processes of the program. If the ensemble size is specified after the call to init_parallel_pdaf (as in the example), it is recommended to set this argument to 0. In this case no consistency check is performed.
- screen: An integer defining whether information output is written to the screen (i.e. standard output). The following choices are available:
  - 0: quiet mode - no information is displayed.
  - 1: Display standard information about the configuration of the processes (recommended)
  - 2: Display detailed information for debugging
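A consistency check of the kind described for dim_ens could look like the following. The rules are an assumption, not the template's code: the number of model tasks is limited to the number of processes and, if dim_ens > 0, to the ensemble size, while dim_ens = 0 skips the ensemble check. The function name checked_n_modeltasks is hypothetical.

```fortran
! Hypothetical consistency check for the number of model tasks.
MODULE mod_check_sketch
  IMPLICIT NONE
CONTAINS
  PURE INTEGER FUNCTION checked_n_modeltasks(n_modeltasks, npes_world, dim_ens)
    INTEGER, INTENT(in) :: n_modeltasks, npes_world, dim_ens
    ! At most one model task per process
    checked_n_modeltasks = MIN(n_modeltasks, npes_world)
    ! With dim_ens > 0: no more model tasks than ensemble members
    IF (dim_ens > 0) checked_n_modeltasks = MIN(checked_n_modeltasks, dim_ens)
  END FUNCTION checked_n_modeltasks
END MODULE mod_check_sketch
```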

Compiling and testing the extended program

To compile the model with the adaption for modified parallelization, one needs to ensure that the additional files (init_parallel_pdaf.F90, mod_parallel_pdaf.F90 and potentially parser_mpi.F90) are included in the compilation.

If a serial model is used, one needs to adapt the compilation to compile for MPI parallelization. Usually, this is done with a compiler wrapper like 'mpif90', which should be part of the MPI installation. If you compiled a PDAF tutorial case before, you can check the compile settings for the tutorial.

One can test the extension by running the compiled model. It should run as it did without these changes, because mod_parallel_pdaf defines by default that a single model task is executed (n_modeltasks=1). If screen is set to 1 in the call to init_parallel_pdaf, the standard output should include lines like

Initialize communicators for assimilation with PDAF

                  PE configuration:
   world   filter     model        couple     filterPE
   rank     rank   task   rank   task   rank    T/F
  ----------------------------------------------------------
     0       0      1      0      1      0       T
     1       1      1      1      2      0       T
     2       2      1      2      3      0       T
     3       3      1      3      4      0       T

These lines show the configuration of the communicators. This example was executed using 4 processes and n_modeltasks=1, i.e. mpirun -np 4 ./model_pdaf -dim_ens 4 in tutorial/online_2D_parallelmodel. (In this case, the variables npes_filter and npes_model will have a value of 4.)

To test parallel model tasks one has to set the variable n_modeltasks to a value larger than one. Now, the model will execute parallel model tasks. For n_modeltasks=4 and running on a total of 4 processes the output from init_parallel_pdaf will look like the following:

Initialize communicators for assimilation with PDAF

                  PE configuration:
   world   filter     model        couple     filterPE
   rank     rank   task   rank   task   rank    T/F
  ----------------------------------------------------------
     0       0      1      0      1      0       T
     1              2      0      1      1       F
     2              3      0      1      2       F
     3              4      0      1      3       F

In this example only a single process will compute the filter analysis (filterPE=.true.). There are now 4 model tasks, each using a single process. Thus, both npes_filter and npes_model will be one.

Using multiple model tasks can result in the following effects:

- The standard screen output of the model can be shown multiple times. For a serial model this is the case, since all processes can now write output. For a parallel model, this is due to the fact that often the process with rank=0 performs screen output. By splitting the communicator COMM_model, there will be as many processes with rank 0 as there are model tasks. Here one can adapt the code to use mype_world==0 from mod_parallel_pdaf.
- Each model task might write file output. This can lead to the case that several processes try to generate the same file or try to write into the same file. In the extreme case this can result in a program crash. For this reason, it might be useful to restrict the file output to a single model task. This can be implemented using the variable task_id, which is initialized by init_parallel_pdaf and holds the index of the model task ranging from 1 to n_modeltasks.
  - For the ensemble assimilation, it can be useful to switch off the regular file output of the model completely. As each model task holds only a single member of the ensemble, this output might not be useful. In this case, the file output for the state estimate and perhaps all ensemble members should be done in the pre/poststep routine (prepoststep_pdaf) of the assimilation system.
  - An alternative approach is to run each model task in a separate directory. This needs an adapted setup. For example, for the tutorial in tutorial/online_2D_parallelmodel:
    - create sub-directories x1, x2, x3, x4
    - copy model_pdaf into each of these directories
    - for ensemble size 4 and each model task using 2 processes, we can now run

      mpirun -np 2 ./x1/model_pdaf -dim_ens 4 : \
             -np 2 ./x2/model_pdaf -dim_ens 4 : \
             -np 2 ./x3/model_pdaf -dim_ens 4 : \
             -np 2 ./x4/model_pdaf -dim_ens 4
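The first two measures above reduce to simple conditions on mype_world and task_id. The helper functions in this sketch are hypothetical; they assume that mype_world and task_id are taken from mod_parallel_pdaf as described.

```fortran
! Hypothetical helpers to avoid duplicated output with several model tasks.
MODULE mod_output_sketch
  IMPLICIT NONE
CONTAINS
  ! Screen output only on world rank 0; after splitting COMM_model, model
  ! rank 0 exists once per model task and would print repeatedly
  PURE LOGICAL FUNCTION do_screen_output(mype_world)
    INTEGER, INTENT(in) :: mype_world
    do_screen_output = (mype_world == 0)
  END FUNCTION do_screen_output

  ! Regular model file output only from the processes of model task 1
  PURE LOGICAL FUNCTION do_file_output(task_id)
    INTEGER, INTENT(in) :: task_id
    do_file_output = (task_id == 1)
  END FUNCTION do_file_output
END MODULE mod_output_sketch
```

A model statement such as IF (mype_model == 0) WRITE (*,*) ... would then become IF (do_screen_output(mype_world)) WRITE (*,*) ..., and file output of a parallel model would additionally keep its own rank checks within model task 1.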

Special Case

COMM_mymodel does not include all processes

If the parallelized model uses a communicator that is named differently from MPI_COMM_WORLD (we use COMM_mymodel here), there can be two cases:

- The easy case, described above, is that COMM_mymodel includes all processes of the program. Often this is set as COMM_mymodel = MPI_COMM_WORLD, and COMM_mymodel is introduced to have the option to define it differently.
- In some models, COMM_mymodel is introduced to represent only a sub-set of the processes in MPI_COMM_WORLD. One use case for this is coupled models, like atmosphere-ocean models. Here, the ocean might use a sub-set of processes and the program defines separate communicators for the ocean and atmosphere. There are also models that use a few of the processes to perform file operations with a so-called IO-server. In this case, the model runs on all but these few processes and COMM_mymodel would be defined accordingly.

For case 2, one needs to edit 'init_parallel_pdaf.F90':

- Include the PDAF module with USE PDAF.
- Replace MPI_COMM_WORLD in all cases by COMM_mymodel.
- At the end of init_parallel_pdaf, set the main communicator for PDAF by CALL PDAF_set_comm_pdaf(COMM_ensemble).
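Case 2 can be sketched as follows: the communicator that is split into model tasks is COMM_mymodel instead of MPI_COMM_WORLD, and processes outside COMM_mymodel (here an assumed IO-server on the highest rank) do not get a model task. For illustration the sketch creates COMM_mymodel itself, which in a real model is done by the model code. The module and routine names are hypothetical, and the PDAF_set_comm_pdaf call from the list above is only shown as a comment because the sketch does not link PDAF.

```fortran
! Hypothetical sketch: split a model communicator that covers only part of
! MPI_COMM_WORLD into model tasks. Not the PDAF template.
MODULE mod_subcomm_sketch
  USE mpi
  IMPLICIT NONE
  SAVE
  INTEGER :: COMM_mymodel   ! model communicator, subset of MPI_COMM_WORLD
  INTEGER :: COMM_model     ! communicator of one model task
  INTEGER :: n_modeltasks = 1
  INTEGER :: task_id = 0    ! stays 0 on processes outside COMM_mymodel
  INTEGER :: MPIerr
CONTAINS
  SUBROUTINE split_subset(with_ioserver)
    LOGICAL, INTENT(in) :: with_ioserver  ! .TRUE.: highest rank is IO server
    INTEGER :: mype_world, npes_world, mype, npes, color, n_per, n_rem

    CALL MPI_Comm_rank(MPI_COMM_WORLD, mype_world, MPIerr)
    CALL MPI_Comm_size(MPI_COMM_WORLD, npes_world, MPIerr)

    ! Illustration only: a real model defines COMM_mymodel itself
    color = 1
    IF (with_ioserver .AND. npes_world > 1 .AND. mype_world == npes_world - 1) &
         color = MPI_UNDEFINED
    CALL MPI_Comm_split(MPI_COMM_WORLD, color, mype_world, COMM_mymodel, MPIerr)
    COMM_model = MPI_COMM_NULL
    IF (COMM_mymodel == MPI_COMM_NULL) RETURN   ! IO server: no model task

    ! COMM_mymodel takes the place of MPI_COMM_WORLD in the splitting
    CALL MPI_Comm_rank(COMM_mymodel, mype, MPIerr)
    CALL MPI_Comm_size(COMM_mymodel, npes, MPIerr)
    n_modeltasks = MAX(1, MIN(n_modeltasks, npes))
    n_per = npes / n_modeltasks
    n_rem = MOD(npes, n_modeltasks)
    IF (mype < n_rem * (n_per + 1)) THEN
      task_id = mype / (n_per + 1) + 1
    ELSE
      task_id = n_rem + (mype - n_rem * (n_per + 1)) / n_per + 1
    END IF
    CALL MPI_Comm_split(COMM_mymodel, task_id, mype, COMM_model, MPIerr)

    ! At the end of init_parallel_pdaf the text prescribes
    !   CALL PDAF_set_comm_pdaf(COMM_ensemble)
    ! (not called here, since this sketch does not link PDAF)
  END SUBROUTINE split_subset
END MODULE mod_subcomm_sketch
```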