
Offline Mode: Initializing the parallelization for PDAF



Overview

The PDAF release provides template code for the offline mode in templates/offline. This page refers to that code, and we recommend using it as the basis for an implementation.

If the template code in templates/offline or the tutorial implementation tutorial/offline_2D_parallel is used as the basis for the implementation, it should be possible to keep this part of the program, i.e. the call to init_parallel_pdaf, unchanged and to proceed directly to the initialization of PDAF for the offline mode.

Like many numerical models, PDAF uses the MPI standard for its parallelization, so an MPI library must be available to compile PDAF. In any case, it is necessary to execute the routine init_parallel_pdaf as described below.

For completeness, we describe here the communicators required for PDAF as well as the template initialization routine.

Three communicators

MPI uses so-called 'communicators' to define sets of parallel processes. As the ensemble integrations are performed externally to the assimilation program in the case of the offline mode, the communicators of the PDAF parallelization are only used internally to PDAF. However, as the communicators need to be initialized, we still describe them here.

In order to provide the 2-level parallelism, three communicators need to be initialized that define the processes that are involved in different tasks of the data assimilation system.

The required communicators are initialized in the routine init_parallel_pdaf and called

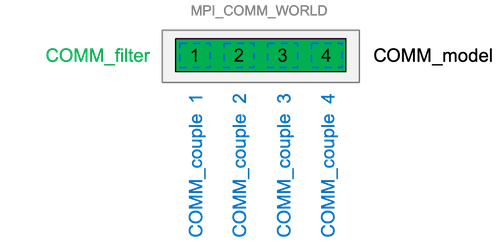

COMM_model- defines the processes that are involved in the model integrationsCOMM_filter- defines the processes that perform the filter analysis stepCOMM_couple- defines the processes that are involved when data are transferred between the model and the filter

The parallel region of an MPI parallel program is initialized by calling MPI_init. By calling MPI_init, the communicator MPI_COMM_WORLD is initialized. This communicator is pre-defined by MPI to contain all processes of the MPI-parallel program. In the offline mode, it would be sufficient to conduct all parallel communication using only MPI_COMM_WORLD. However, as PDAF uses the three communicators listed above internally, they have to be initialized. In general they will be identical to MPI_COMM_WORLD with the exception of COMM_couple, which is not used in the offline mode.

Figure 1: Example of a typical configuration of the communicators for the offline-coupled assimilation with parallelization. In this example we have 4 processes. The communicators COMM_model, COMM_filter, and COMM_couple need to be initialized. However, COMM_model and COMM_filter contain all processes (like MPI_COMM_WORLD), and COMM_couple is a set of communicators, each holding only one process. Thus, the initialization of the communicators is simpler than in the case of the parallelization for the online coupling.
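The configuration in Figure 1 can be illustrated with a small pure-Python model of a color-based communicator split. This is only a sketch and does not use MPI or PDAF: the helper `comm_split` is hypothetical and mimics how `MPI_Comm_split` groups processes that pass the same color, and the coloring used for COMM_couple (world rank plus one) is an assumption chosen to match the single-process groups described above.

```python
from collections import defaultdict


def comm_split(colors):
    """Model MPI_Comm_split: group world ranks by the color they pass.

    colors[i] is the color chosen by world rank i. The result maps each
    color to the world ranks in that sub-communicator; a process's index
    in the list is its rank inside the new communicator.
    """
    groups = defaultdict(list)
    for world_rank, color in enumerate(colors):
        groups[color].append(world_rank)
    return dict(groups)


npes_world = 4  # number of processes, as in Figure 1

# COMM_model: with a single model task, every process gets color 1.
comm_model = comm_split([1] * npes_world)

# COMM_filter: all processes take part in the analysis step.
comm_filter = comm_split([1] * npes_world)

# COMM_couple: one communicator per process (assumed coloring: rank + 1).
comm_couple = comm_split([rank + 1 for rank in range(npes_world)])

print("COMM_model :", comm_model)
print("COMM_filter:", comm_filter)
print("COMM_couple:", comm_couple)
```

With four processes, COMM_model and COMM_filter each contain world ranks 0 to 3, while COMM_couple consists of four groups that hold one process each.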

Initializing the communicators

The routine init_parallel_pdaf, which is supplied with the PDAF package, initializes the necessary communicators for the assimilation program and PDAF. The call to the routine should be added to the assimilation program, usually directly after the initialization of the parallelization described above.

The routine init_parallel_pdaf also defines the communicators COMM_filter and COMM_couple that were described above. The provided routine init_parallel_pdaf is a template implementation. For the offline mode, it should not be necessary to modify it!

For the offline mode, the variable n_modeltasks should always be set to one, because no integrations are performed in the assimilation program. The routine init_parallel_pdaf splits the communicator MPI_COMM_WORLD and defines the three communicators described above. In addition, the variables npes_world and mype_world are defined. These can be used in the user-supplied routines to control, for example, which process writes information to the screen. The routine defines several more variables that are declared and held in the module mod_parallel. It can be useful to use this module in the model code, as some of these variables are required when the initialization routine of PDAF (PDAF_init) is called.

The initialization of the communicators is different for the offline and online modes. You can find a template in the tutorial implementations for the offline case, e.g. in tutorial/offline_2D_parallel/.

Arguments of init_parallel_pdaf

The routine init_parallel_pdaf has two arguments, which are the following:

SUBROUTINE init_parallel_pdaf(dim_ens, screen)

- dim_ens: An integer defining the ensemble size. This allows checking the consistency of the ensemble size with the number of processes of the program. For the offline mode, one should set this variable to 0. In this case, no consistency check for the ensemble size with regard to the parallelization is performed.

- screen: An integer defining whether information output is written to the screen (i.e. standard output). The following choices are available:

  - 0: Quiet mode; no information is displayed

  - 1: Display standard information about the configuration of the processes (recommended)

  - 2: Display detailed information for debugging

Compiling and testing the assimilation program

If one wants to check the initialization of the parallelization, one can compile the assimilation program with just the call to init_parallel_pdaf as well as the calls to MPI_init and MPI_finalize. One can then test the initialization by running the compiled assimilation program. If screen is set to 1 in the call to init_parallel_pdaf, the standard output should include lines like

    PDAF: Initializing communicators
    PE configuration:
     world   filter     model        couple     filterPE
     rank     rank    task   rank   task   rank    T/F
    ----------------------------------------------------------
       0       0       1      0      1      0       T
       1       1       1      1      2      0       T
       2       2       1      2      3      0       T
       3       3       1      3      4      0       T

These lines show the configuration of the communicators. This example was executed using 4 processes and n_modeltasks=1.
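When testing with more processes, the printed configuration can also be checked automatically. The following sketch parses the data rows of such an output and compares them with the pattern of the example above. The function names `parse_pe_rows` and `check_offline_layout` are hypothetical and not part of PDAF, and the expected pattern (model task 1, filter and model rank equal to the world rank, couple rank 0, all processes being filter processes) is inferred from the example output only.

```python
def parse_pe_rows(text):
    """Extract the data rows of the 'PE configuration' table.

    Each row becomes a tuple (world_rank, filter_rank, model_task,
    model_rank, couple_task, couple_rank, is_filter_pe).
    """
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have six integers followed by a T/F flag.
        if len(fields) != 7 or fields[6] not in ("T", "F"):
            continue
        try:
            numbers = [int(field) for field in fields[:6]]
        except ValueError:
            continue
        rows.append((*numbers, fields[6] == "T"))
    return rows


def check_offline_layout(rows):
    """Return a list of messages for rows that deviate from the example."""
    problems = []
    for world, filt, m_task, m_rank, c_task, c_rank, is_filter in rows:
        if m_task != 1:
            problems.append(f"rank {world}: model task {m_task}, expected 1")
        if filt != world or m_rank != world:
            problems.append(f"rank {world}: filter/model rank differs from world rank")
        if c_task != world + 1 or c_rank != 0:
            problems.append(f"rank {world}: unexpected couple task/rank")
        if not is_filter:
            problems.append(f"rank {world}: not a filter process")
    return problems


# Example output for 4 processes and n_modeltasks=1, as shown above.
sample_output = """\
 world   filter     model        couple     filterPE
 rank     rank    task   rank   task   rank    T/F
----------------------------------------------------------
   0       0       1      0      1      0       T
   1       1       1      1      2      0       T
   2       2       1      2      3      0       T
   3       3       1      3      4      0       T
"""

rows = parse_pe_rows(sample_output)
problems = check_offline_layout(rows)
print(f"{len(rows)} processes parsed, {len(problems)} problems found")
```

Header and separator lines are skipped because they do not consist of six integers followed by a T or F flag, so the captured standard output can be passed to `parse_pe_rows` without trimming it first.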